From good to great: AI-powered Aiven for PostgreSQL server tuning

How DBtune AI boosts performance on Aiven.

Getting the most out of Aiven for PostgreSQL isn't just about using a managed service — it’s about tuning it to match your actual workload, reducing latency, reducing cost and improving reliability. While Aiven provides excellent defaults, as highlighted in this recent blog, they’re inherently generic and leave performance potential untapped. That’s where DBtune comes in.

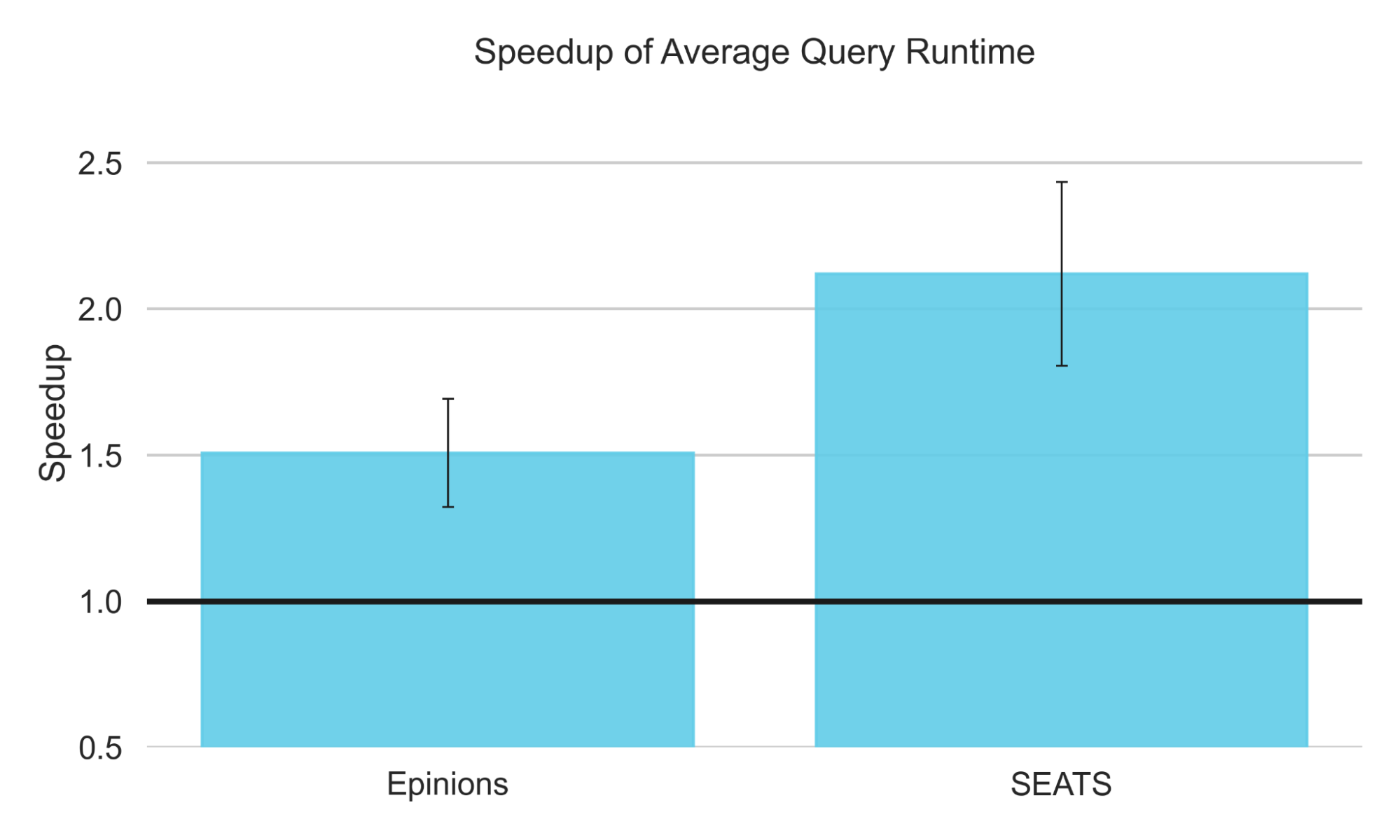

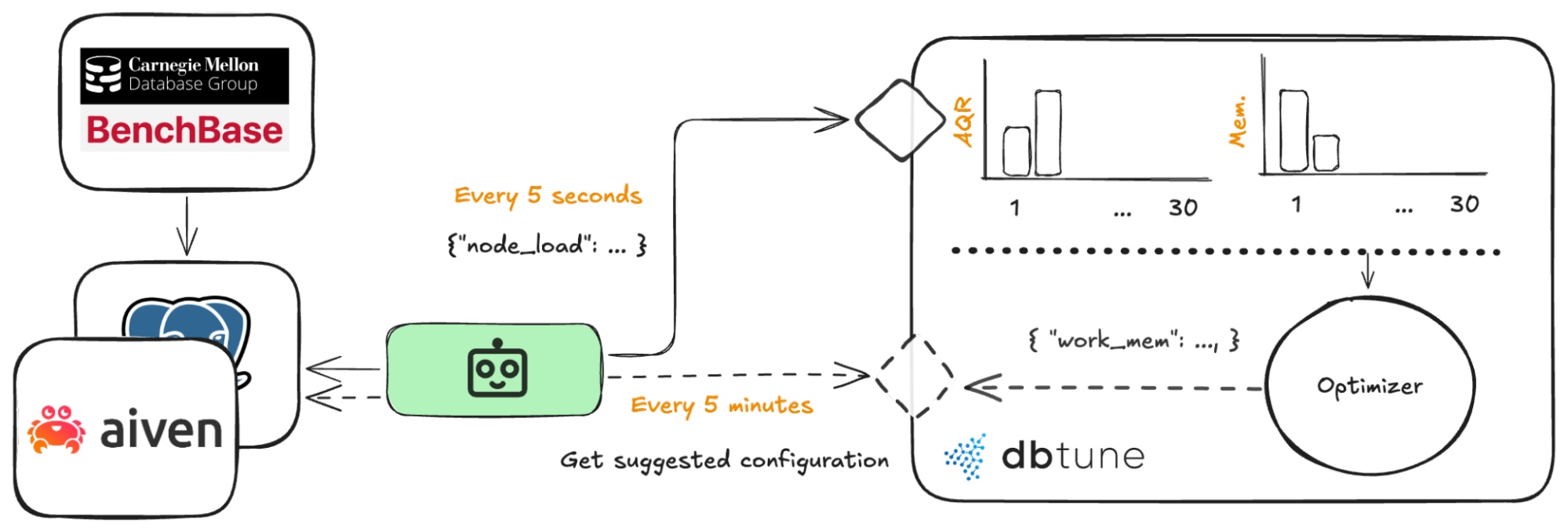

In this article, we show how DBtune’s AI-powered tuning engine optimized PostgreSQL server parameters on Aiven and delivered up to 2.15x speedup in realistic benchmarks. Whether you’re handling heavy reads or mixed transactional workloads, this guide will walk you through the results—and how you can apply them yourself.

Curious what performance gains you can unlock? Start tuning your Aiven for PostgreSQL instance with DBtune: try it for free on up to 3 databases!

Speedups on two BenchBase benchmarks, SEATS (mixed workload) and Epinions (read-heavy), relative to Aiven for PostgreSQL defaults, which are indicated by the horizontal line at 1. All runs were on the Startup-16 plan with 4 vCPUs and 16 GB of RAM. Error bars indicate the standard error of the mean over 3 runs.

How DBtune optimizes Aiven for PostgreSQL using AI

DBtune is an AI-powered service designed to automate PostgreSQL server parameter tuning. By removing the need for trial-and-error, DBtune significantly reduces the time and cost of manual tuning, making high-performance database management accessible, even to teams without the resources for a dedicated database administrator.

DBtune uses machine-learning-driven optimization methods to analyze a database’s workload and suggest optimal configurations. It takes a fundamentally different approach to database tuning compared to heuristic-based tools, such as PGTune, Cybertec PostgreSQL Configurator or EDB Postgres tuner. Similar to how an experienced database administrator might manually tune a database, DBtune employs an iterative optimization process that:

- Tunes PostgreSQL server parameters based on real-time performance and hardware resource usage (CPU, memory, disk I/O).

- Evaluates the impact of changes against your chosen optimization target.

- Iteratively refines the configuration to maximize performance safely.

A complete DBtune tuning session involves 30 iterations, where the system tests different configuration combinations before identifying what it determines to be the optimal setup.

To find the optimal configuration for the workload, the system carefully explores the range of possible parameter values. Its optimization goal is one of the following: minimize the average query runtime (AQR), maximize transactions per second (TPS), or minimize a tail-latency measure of query runtime akin to the 99th percentile (P99).

DBtune offers several key advantages:

- Automation: Reduce both cost and time of expensive performance optimization.

- Workload optimization: Tailored to your workload for increased performance.

- Production safety: Prevents instability and database downtime.

DBtune currently tunes 13 parameters for community PostgreSQL. Eleven of these parameters need only a server reload, with no server downtime, and two require a server restart. Because the number of tunable parameters may increase, please consult the DBtune documentation for the most up-to-date information. Users also have the option to tune only the parameters that can be adjusted with a reload through a setting in the web application.

- work_mem

- random_page_cost

- seq_page_cost

- checkpoint_completion_target

- effective_io_concurrency

- max_parallel_workers_per_gather

- max_parallel_workers

- max_wal_size

- min_wal_size

- bgwriter_lru_maxpages

- bgwriter_delay

- shared_buffers (restart)

- max_worker_processes (restart)

Implementing DBtune on Aiven PostgreSQL: Setup & current limitations

Because Aiven for PostgreSQL is a managed service, it handles parameter customization differently from community PostgreSQL, and this affects the tuning process. This section documents how DBtune interacts with the database service, as well as some limitations on tuning methods. In summary, Aiven’s current API only allows tuning a subset of parameters, and tuning on Aiven requires more server restarts than tuning community PostgreSQL would. The table later in this section lists the nine parameters that users can tune through the Aiven API or directly through PostgreSQL. First, let us take a look at how we can change parameters.

Standard PostgreSQL offers three primary ways to change database parameters:

- Directly edit the `postgresql.conf` file.

- Run an `ALTER SYSTEM SET <parameter> = <value>;` command.

- Use `ALTER DATABASE <dbname> SET <parameter> = <value>;`, which changes parameters only at the database level, not the cluster level.

However, Aiven for PostgreSQL restricts these methods because it is a managed service. Instead, you can:

- Use Aiven's API: `PUT /project/{project}/service/{service}`. This is the primary way to change service-level parameters.

- Connect directly to your database instance and use ALTER DATABASE commands for database-level parameters.

Let’s see what an API request looks like.

$ curl --request PUT \

--url 'https://api.aiven.io/v1/project/{project}/service/{service}' \

--header 'Authorization: Bearer {token}' \

--data '{

"user_config": { # The 2 service level parameters offered

"shared_buffers_percentage": 30,

"work_mem": 4096,

"pg": { # Other PostgreSQL parameters...

"bgwriter_delay": 400,

"bgwriter_lru_maxpages": 100,

"random_page_cost": 1.5,

}}}'

{"message":"Invalid 'user_config': Invalid input for pg: Additional properties are not allowed (random_page_cost was unexpected)","status":400}

Damn. It looks like we are unable to change some parameters via their API. So let’s try connecting directly to the PostgreSQL instance and using the ALTER SYSTEM SET command, as we normally would.

defaultdb=> ALTER SYSTEM SET random_page_cost = 1.5;

ERROR: permission denied to set parameter "random_page_cost"

No luck. What about adjusting the database-level parameters?

defaultdb=> ALTER DATABASE defaultdb SET random_page_cost = 1.5;

ALTER DATABASE

Alright, now we’re getting somewhere! However, there’s an important caveat to keep in mind when running ALTER DATABASE commands, and that is that changed parameter settings will only affect new database sessions, not pre-existing ones. If we use the SHOW command within the same session where we made the change, we’ll see that the setting hasn’t taken effect yet.

defaultdb=> SHOW random_page_cost;

random_page_cost

--------------------------

1.0

For the parameter to take effect, the session must be refreshed by reconnecting. Since we don’t control the BenchBase benchmark session, we cannot refresh it directly. So while the agent could simply reconnect to apply the changes, it can’t force the benchmark session to do so. Therefore, we restart the database to force the benchmark to reconnect. This wouldn’t have been required if we could use the ALTER SYSTEM SET command.

To be clear, while Aiven does not require a restart for these parameters, the nature of how we configure these parameters through ALTER DATABASE requires all sessions to be re-established. We chose to restart the database to make this happen.

Although we could configure some parameters via the Aiven service, and others at the database level, some parameters are still inaccessible through the Aiven API. For reference, this page offers a good overview of community PostgreSQL server parameters and their defaults. The table below summarizes the complete set of the 13 parameters that DBtune tunes and compares them with the parameter set we found after running SELECT name, setting, boot_val, reset_val from pg_settings; and testing different configuration possibilities.

| # | Parameter | How to modify it in Aiven | Requires restart in PostgreSQL? | Requires restart in Aiven? | Aiven default | PostgreSQL default |
|---|---|---|---|---|---|---|
| 1 | work_mem | Aiven API | No | No | ~13 MB (1 MB + 0.075% of RAM) | 4 MB |
| 2 | bgwriter_delay | Aiven API | No | No | 200 ms | 200 ms |
| 3 | bgwriter_lru_maxpages | Aiven API | No | No | 100 | 100 |
| 4 | max_parallel_workers | Aiven API | No | No | 8 | 8 |
| 5 | max_parallel_workers_per_gather | Aiven API | No | No | 2 | 2 |
| 6 | shared_buffers | Aiven API | Yes | Yes | 3198 MB (20% of RAM) | 128 MB |
| 7 | effective_io_concurrency | ALTER DATABASE | No | Yes | 144 | 1 |
| 8 | random_page_cost | ALTER DATABASE | No | Yes | 1 | 4 |
| 9 | seq_page_cost | ALTER DATABASE | No | Yes | 1 | 1 |
| 10 | max_worker_processes | Aiven API, but [^1] | Yes | Yes | 8 | 8 |
| 11 | checkpoint_completion_target | Can’t modify | No | – | 0.9 | 0.9 |
| 12 | max_wal_size | Can’t modify | No | – | 17583 MB | 1024 MB |
| 13 | min_wal_size | Can’t modify | No | – | 80 MB | 80 MB |

[^1] We can change max_worker_processes using Aiven’s API. However, for reasons that are unclear to us, the value can only ever be increased in Aiven: setting it below its current value returns an error.
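The table above can be read as a routing rule: given a proposed configuration, which parameters go through the Aiven API, which need an ALTER DATABASE statement, and which have to be dropped. The Python sketch below is only an illustration of that reading. The set names, the `route_parameters` helper and the example values are our own assumptions, not the DBtune agent's code, and the sketch does not model how the API nests parameters (for example, shared_buffers is set through shared_buffers_percentage).

```python
# Illustrative routing built from the table above. The names and layout here
# are assumptions for this sketch, not DBtune's agent implementation.
AIVEN_API = {
    "work_mem", "bgwriter_delay", "bgwriter_lru_maxpages",
    "max_parallel_workers", "max_parallel_workers_per_gather",
    "shared_buffers", "max_worker_processes",
}
ALTER_DATABASE = {"effective_io_concurrency", "random_page_cost", "seq_page_cost"}
NOT_MODIFIABLE = {"checkpoint_completion_target", "max_wal_size", "min_wal_size"}


def route_parameters(config, dbname="defaultdb"):
    """Split a proposed configuration by how it can be applied on Aiven."""
    api_params, sql_statements, skipped = {}, [], []
    for name, value in sorted(config.items()):
        if name in AIVEN_API:
            api_params[name] = value
        elif name in ALTER_DATABASE:
            # Takes effect only for sessions opened after the statement runs.
            sql_statements.append(f"ALTER DATABASE {dbname} SET {name} = {value};")
        elif name in NOT_MODIFIABLE:
            skipped.append(name)
        else:
            raise KeyError(f"unknown parameter: {name}")
    return api_params, sql_statements, skipped


# Hypothetical proposed configuration, for illustration only.
proposed = {"bgwriter_delay": 400, "random_page_cost": 1.5, "max_wal_size": 2048}
api_params, sql_statements, skipped = route_parameters(proposed)
print(api_params)
print(sql_statements)
print(skipped)
```

A real agent would also need to validate values and handle the increase-only behaviour of max_worker_processes noted in the footnote; both are left out here for brevity.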

Understanding Aiven for PostgreSQL’s default configuration

The existing Aiven default configuration will serve as the baseline for performance improvement. Aiven has customized some of the default parameter values compared to community PostgreSQL, and four of the parameters that DBtune can tune have these Aiven customizations.

| Parameter | Aiven default | PostgreSQL default |
|---|---|---|
| work_mem | ~13 MB (1 MB + 0.075% of RAM) | 4 MB |
| shared_buffers | 3198 MB (20% of RAM) | 128 MB |
| effective_io_concurrency | 144 | 1 |
| random_page_cost | 1 | 4 |

- `effective_io_concurrency`: This sets the number of concurrent disk I/O operations that PostgreSQL expects the storage to handle, and it can be important to tune for your hardware. Because Aiven allocates your disk, they ensure this parameter is adjusted to match the machine type you've requested.

- `random_page_cost`: This is the query planner's estimate of the cost of loading a random page from disk. The PostgreSQL default of 4 is widely understood to be designed for HDDs, while Aiven’s default of 1 is a value commonly used for SSDs.

- `shared_buffers`: This defines the shared memory PostgreSQL uses for its table and index operations. Notably, modifying this parameter requires a restart for changes to take effect, which took between 2 and 10 seconds on Aiven in our benchmarks. Aiven allows you to configure this via their `shared_buffers_percentage` variable, and they explain its behaviour as follows:

  > Percentage of total RAM that the database server uses for shared memory buffers. Valid range is 20-60 (float), which corresponds to 20% - 60%. This setting adjusts the shared_buffers configuration value.

  A percentage-based approach is much more intuitive for an end user, nice one!

- `work_mem`: Aiven defines this as follows:

  > Sets the maximum amount of memory to be used by a query operation (such as a sort or hash table) before writing to temporary disk files, in MB. Default is 1MB + 0.075% of total RAM (up to 32MB).

  To clarify a point we initially found confusing: Aiven’s default setting is capped at 32MB, but Aiven does not impose a 32MB limit on the `work_mem` parameter itself.
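To make the two formulas quoted above concrete, here is a small Python sketch of the arithmetic. The function names are ours, and the total RAM figure of 15990 MB is an assumption inferred from the reported 3198 MB at 20%; Aiven may round or compute these values differently internally.

```python
def aiven_default_work_mem_mb(total_ram_mb):
    """Default work_mem: 1 MB + 0.075% of total RAM, capped at 32 MB."""
    return min(1.0 + 0.00075 * total_ram_mb, 32.0)


def shared_buffers_mb(total_ram_mb, percentage=20.0):
    """shared_buffers for a given shared_buffers_percentage (valid range 20-60)."""
    if not 20.0 <= percentage <= 60.0:
        raise ValueError("shared_buffers_percentage must be between 20 and 60")
    return total_ram_mb * percentage / 100.0


# Assumption: usable RAM inferred from 3198 MB being 20% of the total.
TOTAL_RAM_MB = 15990

print(round(aiven_default_work_mem_mb(TOTAL_RAM_MB), 2))
for pct in (20, 40, 60):
    print(pct, round(shared_buffers_mb(TOTAL_RAM_MB, pct)))
```

Under that assumption the default work_mem comes out at roughly 13 MB, and the 20%, 40% and 60% settings correspond to 3198 MB, 6396 MB and 9594 MB of shared buffers.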

Overall, Aiven for PostgreSQL appears to provide reliable, good heuristic defaults. Although it is usually not critical, it could be advantageous for Aiven for PostgreSQL to set max_worker_processes and max_parallel_workers in line with the system’s vCPU count, which is 4 on the Startup-16 plan.

Experimental environment and benchmarks

Experimental infrastructure

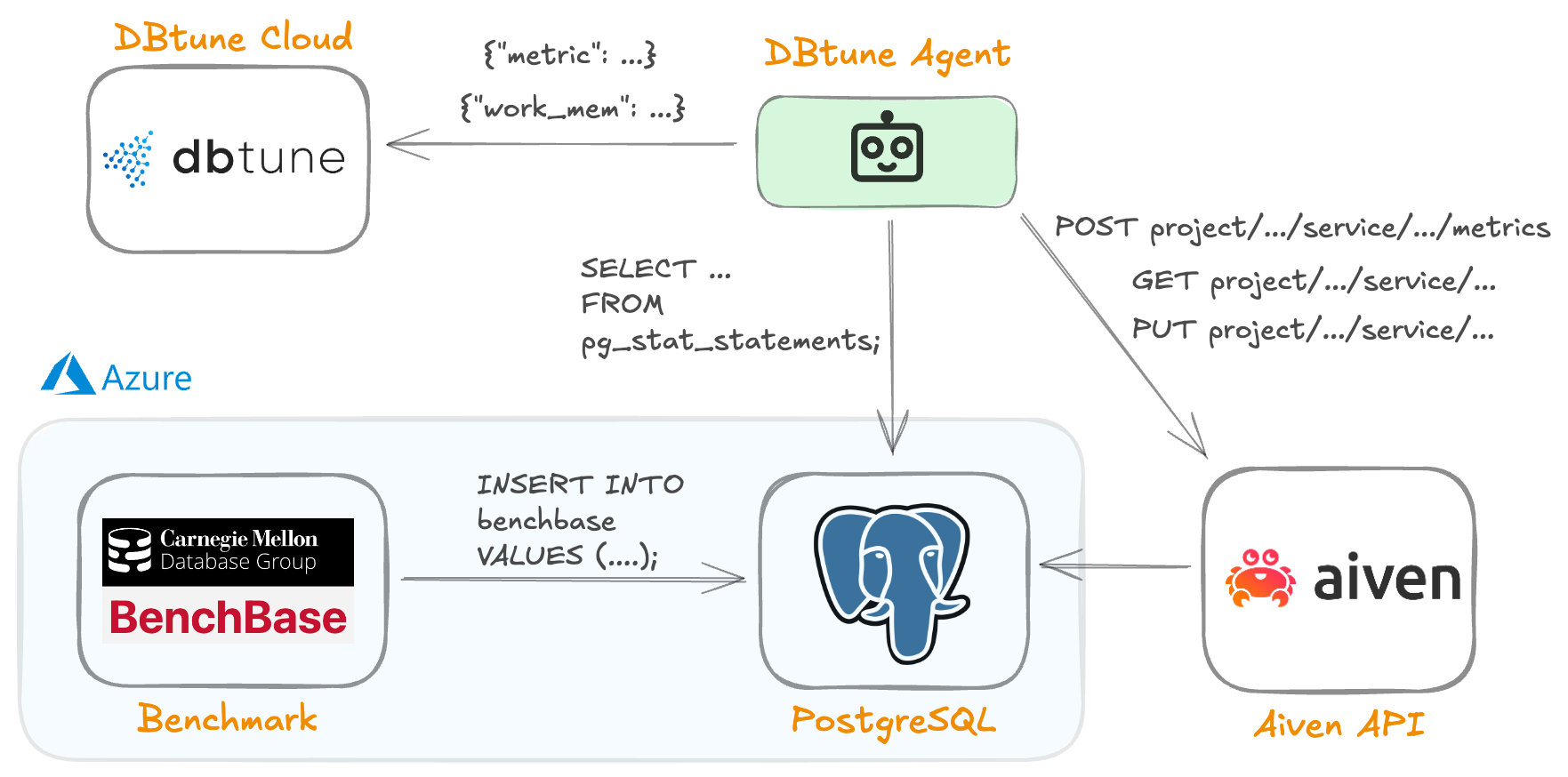

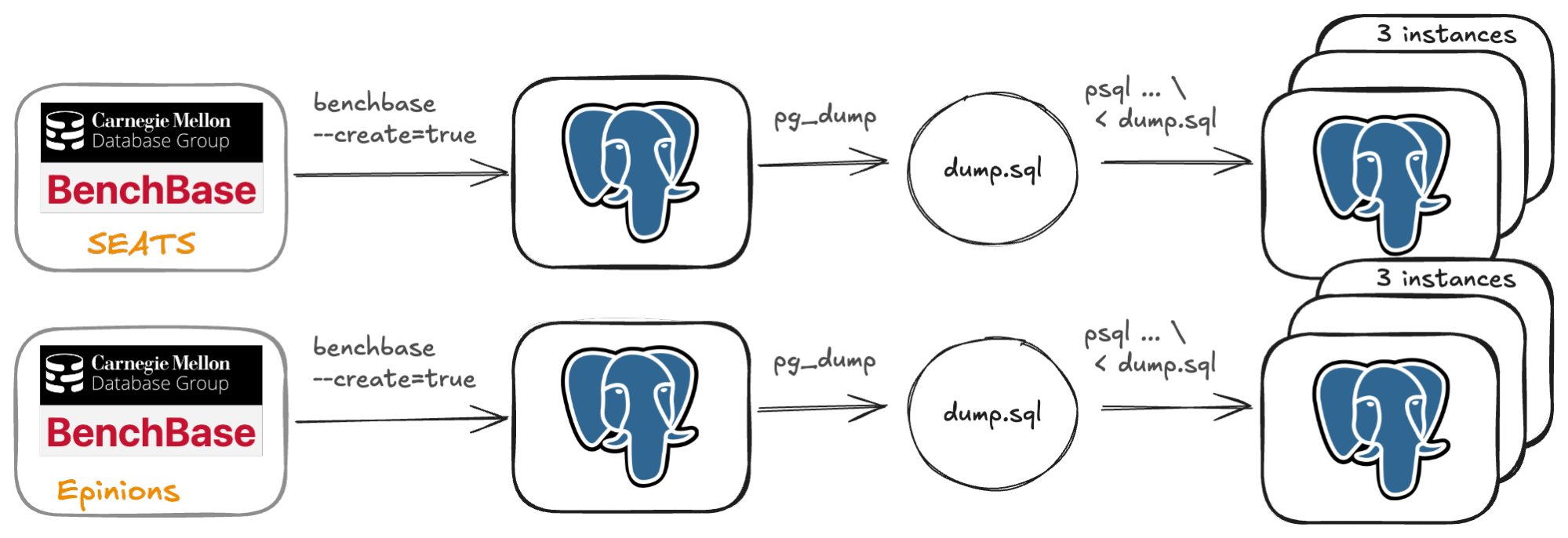

We used an environment with four main components, plus Aiven’s API layer:

- Benchmark: The VM running the workload to generate queries. Notably, this VM is separate from the database, ensuring the database runs in isolation. This emulates a real-world workload hitting your database. To reproduce our setup, you can follow our guide on setting up a synthetic workload. We connect each benchmark to a database, using the connection URL to run queries.

  Details: Ubuntu 24.04 LTS; Azure east-us: Standard_D4ads_v6; 4 vCPUs, 16 GB RAM, 220 GB disk; Runs BenchBase to simulate real workloads.

- Database: The VM provisioned through Aiven for PostgreSQL. In this case, we’re using the generous 30-day free trial, specifically a Startup-16 instance. Following DBtune’s documentation on Aiven for PostgreSQL, we install the `pg_stat_statements` extension.

  Details: PostgreSQL 16.8; No read-replicas; 4 vCPUs, 16 GB RAM, 350 GB SSD storage; Azure: east-us.

- Agent: Monitors database activity, using Aiven’s API to monitor system metrics and querying `pg_stat_statements` directly. The agent was run on a local developer machine, regularly syncing metrics and getting responses with recommended configurations from DBtune cloud. The agent is open-source, pre-compiled and written in Go.

  Details: Locally running DBtune open-source Agent 2.1; internally uses aiven/go-client-codegen

- DBtune cloud: The web-app interface to monitor system metrics, begin tuning and see how the tuning session is performing. To see how this looks once set up, we provide screenshots in our documentation. This is where the optimizer runs and new recommended configurations are created.

  Details: 😉

Illustration of the environment setup with arrows indicating instigators of communication. The database instance is provisioned in Azure through Aiven for PostgreSQL, with the benchmark co-located in the same east-us region to reduce latency and maximize pressure on the database’s resources. The DBtune open-source agent is responsible for (1) monitoring the database both through direct connection and through Aiven’s API, (2) reporting metrics back to DBtune cloud at regular intervals and (3) applying a new configuration through Aiven’s API service layer whenever a heartbeat response from DBtune recommends one.

Benchmarks

To demonstrate DBtune’s effectiveness, we employ a well-known benchmark suite, BenchBase, developed by the Carnegie Mellon Database Group. These are the characteristics of the two benchmarks used:

- SEATS simulates an airline ticketing system with complex flight searches, reservations, and customer management. This benchmark represents demanding enterprise applications that handle many concurrent users with a mixed workload. SEATS uses a 60/40 read/write ratio, making it a balanced test for transaction processing.

- The Epinions benchmark, based on the Epinions.com consumer review website, models cross-user interactions like writing product reviews. It includes nine different transactions: four affecting only user records, four affecting only item records, and one affecting all database tables. The workload is mixed but primarily read-heavy, similar to content management and social media applications.

Experiment setup

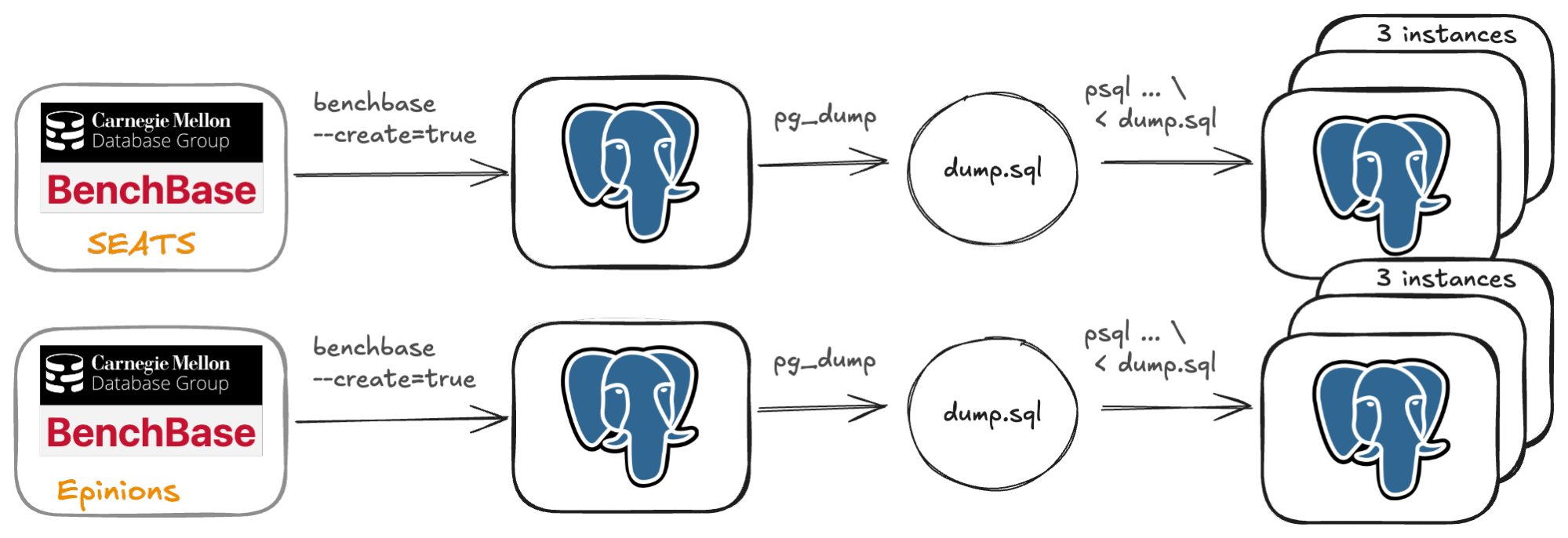

We start by setting up an Azure instance with BenchBase and provisioning two Aiven for PostgreSQL Startup-16 instances (one per benchmark). Next, we configure the BenchBase workers with a connection URL to their respective PostgreSQL database, where they prepare the schemas and relations before populating the tables.

The preparation takes roughly 6-14 hours, varying by benchmark. After the database preparation, we use pg_dump to export a dump which we can apply to newly created databases. These dumps are only needed once, and can be re-used afterward.

To perform three repetitions for both benchmarks, we provisioned a total of six Aiven for PostgreSQL instances.

The figure above illustrates the process by which we prepare databases for tuning. For each benchmark, we use one Aiven for PostgreSQL database and one Azure worker to generate the benchmark’s initial data. Once this initial data generation is complete, we export a pg_dump of the database. Then, we use it to create three new Aiven for PostgreSQL databases per benchmark, resulting in six database instances.

Next, we set up a BenchBase worker, ready to execute the specific benchmark, and configure an agent process for monitoring each database instance.

An important step before tuning begins is to allow the database to warm up. Because caches significantly influence performance profiling, we execute the benchmark against the database for two hours. This duration allows the caches to populate and data access patterns to stabilize, simulating a longer-running workload.

Now, we are ready to begin the tuning process. For this step, we need one agent per database. We provide each agent with relevant credentials and connection URLs. These agents have minimal resource requirements and can easily be run on your local machine. Each agent connects to the DBtune cloud service, where it starts reporting system and database performance metrics. Once the warm-up period has finished, we initiate the tuning.

Each database instance has a paired BenchBase worker for benchmark execution and a DBtune agent for monitoring and tuning. The benchmark is executed against the database for a two-hour warm-up before tuning. Then, each agent monitors its database and communicates with DBtune cloud to get suggested configurations, which it then applies.

Tuning methodology for Aiven PostgreSQL

In our recommended setting, we tune the PostgreSQL instances in the following manner:

- Establish a baseline: This represents the default configuration of the database before we begin tuning. It allows us to calculate the performance improvement (speedup) achieved through tuning.

- Monitor each configuration: Each configuration, including the baseline, is monitored for a fixed time interval, which we refer to as a tuning iteration. In our experiment, we set DBtune to use a five-minute window for each tuning iteration, which is the standard duration we use for transactional workloads. This window is large enough to capture the performance characteristics of the applied configuration.

- Get the next configuration: After each five minute interval, the agent queries the DBtune cloud service to get the next suggested configuration to apply to the database.

- Repeat the process: We continue until 30 configurations have been evaluated. This process takes 2h30m in total.
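The steps above can be sketched as a simple loop. The Python below is a toy illustration under stated assumptions: `run_tuning_session`, `toy_measure` and `toy_suggest` are hypothetical names, random search stands in for DBtune's optimizer (which is not described in this article), the measurement function replaces a real five-minute benchmark window, and counting the baseline as one of the 30 windows is our assumption.

```python
import random


def run_tuning_session(measure_aqr, suggest, baseline, iterations=30, window_min=5):
    """Measure the baseline, then evaluate one suggested configuration per window."""
    history = [(baseline, measure_aqr(baseline))]
    for _ in range(iterations - 1):
        candidate = suggest(history)
        history.append((candidate, measure_aqr(candidate)))
    best_config, best_aqr = min(history, key=lambda item: item[1])
    baseline_aqr = history[0][1]
    return {
        "best_config": best_config,
        "speedup": baseline_aqr / best_aqr,
        "total_minutes": len(history) * window_min,
    }


rng = random.Random(0)


def toy_measure(config):
    # Hypothetical stand-in for the AQR observed over one five-minute window.
    return 4.0 + config["bgwriter_delay"] / 100.0


def toy_suggest(history):
    # Random search as a placeholder; the real optimizer learns from history.
    return {"bgwriter_delay": rng.choice([50, 100, 200, 400])}


result = run_tuning_session(toy_measure, toy_suggest, {"bgwriter_delay": 200})
print(result["total_minutes"])  # 30 windows x 5 minutes = 150
print(result["best_config"], round(result["speedup"], 2))
```

Because the baseline is always part of the history, the reported speedup in this sketch can never fall below 1.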

This figure illustrates the interactions between the DBtune agent and DBtune cloud during a tuning session. The agent queries the database and system for metrics every five seconds and reports these back to DBtune cloud. Every five minutes, the cloud generates a new suggested database configuration, which the agent receives and applies before the next iteration begins.

Safety guardrails

During tuning, if DBtune detects excessive memory consumption from a configuration, it will activate memory guardrails and quickly revert to the baseline to maintain safe database operations. If the observed performance drops below an acceptable threshold, DBtune triggers performance guardrails, applying a new configuration, and learning from the observation.

Performance metrics for evaluating Aiven tuning

There are many different metrics that can be used to evaluate the performance.

For this blog post, we mainly considered average query runtime (AQR), which served as the metric for determining the optimal configuration.

AQR represents the average query runtime for all queries during a tuning iteration. Specifically, at every five second interval, we compute AQR using the formula:

AQR = time_spent_executing_queries / number_of_queries,

yielding a list of n AQR values AQRs = [AQR_1, … AQR_n]

The overall AQR for a configuration is then the average of this list of values:

AQR_config = sum(AQRs) / n.
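As a worked example of the two formulas above, the following Python sketch computes the per-interval AQR values and their average for a configuration. The sample numbers are made up for illustration, skipping intervals with zero queries is our assumption, and defining speedup as baseline AQR divided by tuned AQR is also an assumption, since the article does not spell it out.

```python
def interval_aqr(time_spent_ms, query_count):
    """AQR for one five-second interval."""
    return time_spent_ms / query_count


def config_aqr(samples):
    """Average of per-interval AQRs; samples are (time_spent_ms, query_count)."""
    # Assumption: intervals with no queries are skipped to avoid dividing by zero.
    aqrs = [interval_aqr(t, n) for t, n in samples if n > 0]
    if not aqrs:
        raise ValueError("no interval contained any queries")
    return sum(aqrs) / len(aqrs)


def speedup(baseline_aqr, tuned_aqr):
    """Assumed definition: how many times lower the tuned AQR is."""
    return baseline_aqr / tuned_aqr


# Hypothetical samples, for illustration only.
baseline_samples = [(500.0, 100), (600.0, 100)]
tuned_samples = [(250.0, 100), (300.0, 100)]

baseline = config_aqr(baseline_samples)
tuned = config_aqr(tuned_samples)
print(baseline, tuned, speedup(baseline, tuned))
```

Note that this is an unweighted average of interval averages, as in the formula above, so an interval with few queries counts as much as a busy one.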

Benchmark results: Tuning Aiven for PostgreSQL with DBtune

In summary, the aggregated results across the three runs were a 2.15x speedup in AQR for SEATS and a 1.51x speedup for Epinions. These results were achieved through full restart tuning, which allows multiple server restarts during the tuning process to optimize all parameters and achieve the maximum possible performance improvement for the Aiven for PostgreSQL database. We used restarts for this experiment so that we could also tune the parameters that need a refresh in Aiven, as described in the previous sections.

The next sections look at a sample run from each benchmark, contrasting the best configuration with the baseline of Aiven’s defaults. It’s also important to note that not all parameter changes have a significant impact on final performance, and their effect is highly dependent on the specific workload and the hardware on which the benchmark is executed.

For both benchmarks, we’ll compare the best found configuration to Aiven’s defaults. We will highlight what we believe to be the most important changes compared to the baseline, in green, and parameters that require more investigation in blue. A formal statistical feature importance analysis to automatically identify the parameters most strongly correlated with AQR will be left for future research.

Case study: SEATS benchmark

| SEATS | Aiven default | DBtune best (2.81x) |
|---|---|---|
| bgwriter_delay | 200ms | 50ms |
| bgwriter_lru_maxpages | 100 | 500 |
| effective_io_concurrency | 144 | 200 |
| max_parallel_workers | 8 | 2 |
| max_parallel_workers_per_gather | 2 | 2 |
| random_page_cost | 1 | 8 |
| seq_page_cost | 1 | 1 |
| work_mem | 13312 kB | 12584 kB |
| shared_buffers_percentage | 20% (3198MB) | 40% (6396MB) |

Initially, we see that bgwriter_delay is reduced to 50ms (from 200ms, a 4x decrease), and bgwriter_lru_maxpages is increased to 5x the default. These parameters control the frequency at which dirty pages are written to disk, and the amount of memory that is written during each background writer execution, respectively. Since the best configuration from each run used similar settings, these parameters appear to be important for tuning.

We also see the best run set max_parallel_workers to 2. Given that the Startup-16 plan provides four vCPUs, we might expect setting this parameter to four would be even better!

However, in the context of the SEATS benchmark, where query runtimes are in the range of 4-6ms, the overhead associated with managing parallel processes can outweigh the performance gains achieved through parallelism.

We also observe that random_page_cost is set to a relatively high value, in fact, significantly higher than PostgreSQL’s default settings. This parameter influences the query planner’s decisions, specifically, its preference for index scans (favored by lower values) versus sequential scans (favored by higher values).

The high setting for random_page_cost is particularly unusual and we will investigate this further in future research. However, examining the DBtune dashboard across both well-performing and poorly performing runs reveals that random_page_cost appears to have little impact on performance, at least within the context of this specific benchmark and machine configuration. We intend to incorporate automated feature importance analysis into an upcoming DBtune dashboard feature!

In summary, for the SEATS workload running on the Startup-16 plan, our analysis suggests that the most influential tuning changes involve:

- Decreasing `bgwriter_delay`.

- Increasing `bgwriter_lru_maxpages`.

- Reducing `max_parallel_workers` to two.

Case study: Epinions benchmark

| Epinions | Aiven default | DBtune (1.74x) |
|---|---|---|
| bgwriter_delay | 200ms | 200ms |
| bgwriter_lru_maxpages | 100 | 100 |
| effective_io_concurrency | 144 | 1 |
| max_parallel_workers | 8 | 8 |
| max_parallel_workers_per_gather | 2 | 0 |
| random_page_cost | 1 | 0.5 |
| seq_page_cost | 1 | 0.5 |
| work_mem | 13312 kB | 12584 kB |
| shared_buffers_percentage | 20% (3198MB) | 60% (9594MB) |

Observing the same parameters, we find the best configuration has retained the default value for both bgwriter_delay and bgwriter_lru_maxpages. This implies that the defaults were adequate for this benchmark, unlike SEATS, where these parameters significantly impacted AQR. Since both benchmarks ran on the same machine type, the difference in optimal values shows us that tuning is also workload-specific, not just hardware-specific.

You’ll also notice that the best configuration has an effective_io_concurrency of 1. While the effect of this parameter might be significant when considered in conjunction with the other parameter settings, our analysis of other configurations with this value set to its minimum of 1 reveals that their performance varied considerably. We can draw two possible conclusions from this: either this parameter has no impact on performance, meaning its value is effectively arbitrary, or its impact is highly dependent on the values of all the other parameters. In either case, manually tuning this parameter would be a difficult task. This highlights the value of methods like DBtune, where this complexity is automated.

By contrast, the effect of max_parallel_workers_per_gather is clearer: the best run sets it to 0, disabling all parallel gather plans. This matches our previous observations that well-performing configurations tended to disable parallel gathers, implying that parallel query overhead is a bottleneck for this benchmark as well.

While Aiven’s default random_page_cost of 1 is often a good setting for most workloads and modern hardware, this run implies that lowering it further may improve performance. This parameter is closely linked to seq_page_cost, and lower values generally seem better across our runs, but more analysis is needed to confirm if setting it to below 1 is what separates the best from the good configurations.

This leads us to the final parameter we consider key for optimizing this workload, shared_buffers_percentage. As is shown in this run, and observed across many configurations, a shared_buffers_percentage in the range of 50-60% appears to correlate loosely with improved AQR, especially when considering configurations where parallel gathers are disabled by setting max_parallel_workers_per_gather to 0.

To summarize, for the Epinions workload on the Aiven Startup-16 plan, disabling parallel gathers (max_parallel_workers_per_gather set to 0) and increasing shared_buffers_percentage to Aiven’s maximum yields a significant speedup over the baseline.

Current constraints of Aiven for PostgreSQL tuning

Perhaps the biggest limitation when it comes to tuning Aiven for PostgreSQL with DBtune is that DBtune cannot tune all the parameters it’s capable of tuning in standard PostgreSQL.

While Aiven simplifies parameter configuration through their API, including managing restarts required by parameters such as shared_buffers, some parameters supported by DBtune for community PostgreSQL are not accessible.

Furthermore, we had anticipated larger performance gains from tuning. The need to force database restarts, even though the tuned parameters do not require restarts in community PostgreSQL, likely hinders optimization. These restarts introduce temporary spikes in performance metrics, which can negatively impact DBtune’s optimization. In practice, we can mitigate this impact by using a longer tuning window. However, we opted for simplicity in our setup. We hope that in the future, these parameters can be tuned with the same ease as other database parameters on Aiven.

Of course, any analysis of this kind would benefit from running more benchmarks, using more random seeds, and varying the tuning setup. While this would be beneficial even now, we hope that once some of the current limitations are addressed, we could invest more resources into thoroughly evaluating DBtune’s full potential.

Key takeaways for Aiven tuning and future implications

Even with just a few tuning sessions, DBtune significantly improved average runtime performance on Aiven for PostgreSQL, achieving 1.5x and 2x speedup on two public benchmarks, all in just under 3 hours.

These improvements translate directly into lower cloud costs, faster application response times, and reduced operational overhead, making DBtune a powerful asset for both technical and business teams.

And yet, there’s still so much more to go. At this time DBtune only offers tuning for parameters supported through Aiven’s API but as Aiven evolves their services, so will DBtune's support. You can keep up to date with DBtune’s current support for your Aiven for PostgreSQL database in the documentation.

Get started with DBtune and experience 2x faster Aiven for PostgreSQL.

You can try for free with up to 3 of your databases!

Want to learn more?

We’re on a mission to deliver performance, reduce cost and to embrace a more sustainable future! Check out how to tune HammerDB or how DBtune compares against manual tuning at Stormatics, a company focused on delivering high-quality services for PostgreSQL.

FAQ

1. Can I see a DBtune demo?

Here you go!

2. What is Aiven for PostgreSQL and why does it need tuning?

Aiven for PostgreSQL is a managed PostgreSQL service that simplifies database operations in the cloud. While it comes with good preconfigured defaults, these settings are generic and not tailored to specific workloads. Tuning Aiven for PostgreSQL with tools like DBtune helps unlock better performance by optimizing server parameters based on actual usage patterns.

3. How much faster can Aiven for PostgreSQL become with tuning?

In public benchmarks, tuning Aiven for PostgreSQL with DBtune resulted in up to a 2.15x speedup in average query runtime. The actual improvement depends on workload and machine type.

4. Does tuning require restarting the Aiven for PostgreSQL database?

Some additional parameters, like shared_buffers, require a restart to take effect on Aiven. DBtune is designed to handle this intelligently and only restarts when the permission is explicitly granted by the user.

5. What’s the difference between DBtune and PGTune for Aiven for PostgreSQL?

PGTune is a static, heuristic-based configuration generator. DBtune, by contrast, is AI-driven, dynamic, and adapts its tuning based on actual runtime metrics. It performs iterative testing and evaluation, much like an expert database administrator would do, making it far more accurate and workload-specific.

6. Which Aiven for PostgreSQL parameters can be tuned by DBtune?

As of May 2025, DBtune offers up to 6 parameters to tune for Aiven for PostgreSQL. These are bgwriter_delay, bgwriter_lru_maxpages, max_parallel_workers, max_parallel_workers_per_gather, work_mem and if restarts are enabled, shared_buffers. However, we expect Aiven for PostgreSQL to make it easier for other parameters to be tuned through API access in the future. The DBtune documentation has the latest information.

7. What is required to tune Aiven for PostgreSQL with DBtune?

You only need to download and run the DBtune open-source agent, which connects to your database as you would, using the standard psql connection URL. The agent can be run anywhere you like as long as it can connect to the database. You can manage the tuning from the DBtune platform. The DBtune documentation walks you through getting started.

8. Where can I learn more about tuning PostgreSQL with DBtune?

You can learn more by visiting the DBtune documentation, which includes step-by-step setup guides, parameter explanations, and advanced tuning strategies for Aiven for PostgreSQL environments.

9. How do you tune PostgreSQL on Aiven?

You can tune Aiven for PostgreSQL using either their platform or through Aiven’s API. For automatic tuning you can leverage DBtune to apply AI-optimized configurations that adapt to your workloads.

10. Can you tune Aiven for PostgreSQL without restarts?

Yes, some parameters can be tuned without restarts, requiring only what is called a configuration reload. However, you might get some additional benefit from allowing restarts. Tools like DBtune will let you choose your preferred option.